Robots are increasingly used to do standardized work. For example, the very first telephone operator jobs arose in 1878, but since the late 1960s the job has been history. Nowadays, when you call somebody, the call is connected automatically by a machine. Telephone operators are not the only ones: more and more jobs are being taken over by robots. Will we humans even be necessary for work processes in the future? Are robots taking over the world without us even knowing it?

Many researchers are now developing new robots, and with them comes growing robot autonomy. Examples include self-driving cars, computers winning at ‘Jeopardy!’ (an American television game show) and even digital assistants like Siri, Google Now and Cortana. Scientists are able to create more and more advanced artificial intelligence (AI). The big question here is: ‘How do you give values to an autonomous device?’ Future autonomous robots may be able to treat people in highly contagious areas, where the risks are too high for humans, or to search autonomously for survivors during natural disasters. Autonomous robots can serve many useful purposes, but how do we actually make sure that they won’t be used for bad ones?

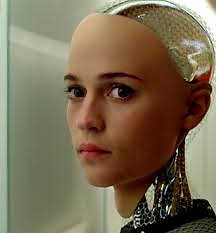

There are many movies in which robots are used for good, but in many others robots are portrayed as the downfall of humanity. Even Stephen Hawking warns that robots may be disastrous in his commentary on the movie Transcendence[1]. In that movie, a scientist uploads his consciousness into an artificial intelligence machine of his own making. In this virtual form he is connected to the internet and thus has the capability to learn everything in just a few seconds. With that capability he is able to control markets and hack into databases, and he eventually sets up his own technological utopia in the desert. His hunger for power makes him create nanoparticles with which he can read and control human minds. Of course this is just a movie, but it is certainly something we must take into account while developing new and more complex AIs.

For this reason a consortium of scientists and inventors (including Elon Musk and Stephen Hawking) has recently drafted an open letter on research priorities for AI. Among the stated goals are maximizing the benefits of AI while minimizing pitfalls that could endanger humans and even humanity. In movies like Transcendence, a bottom-up approach to value sensitive design (VSD) is often used: the designers first build the machine itself and then look at what it can do, only to find out that they have forgotten the values and norms created by humanity. The consortium of scientists and inventors says the opposite: first set the values and norms, and only then define the design requirements.

Robots with AI can be very helpful in the future, provided we design them for the right purposes.

[1] http://www.independent.co.uk/news/science/stephen-hawking-transcendence-looks-at-the-implications-of-artificial-intelligence-but-are-we-taking-9313474.html